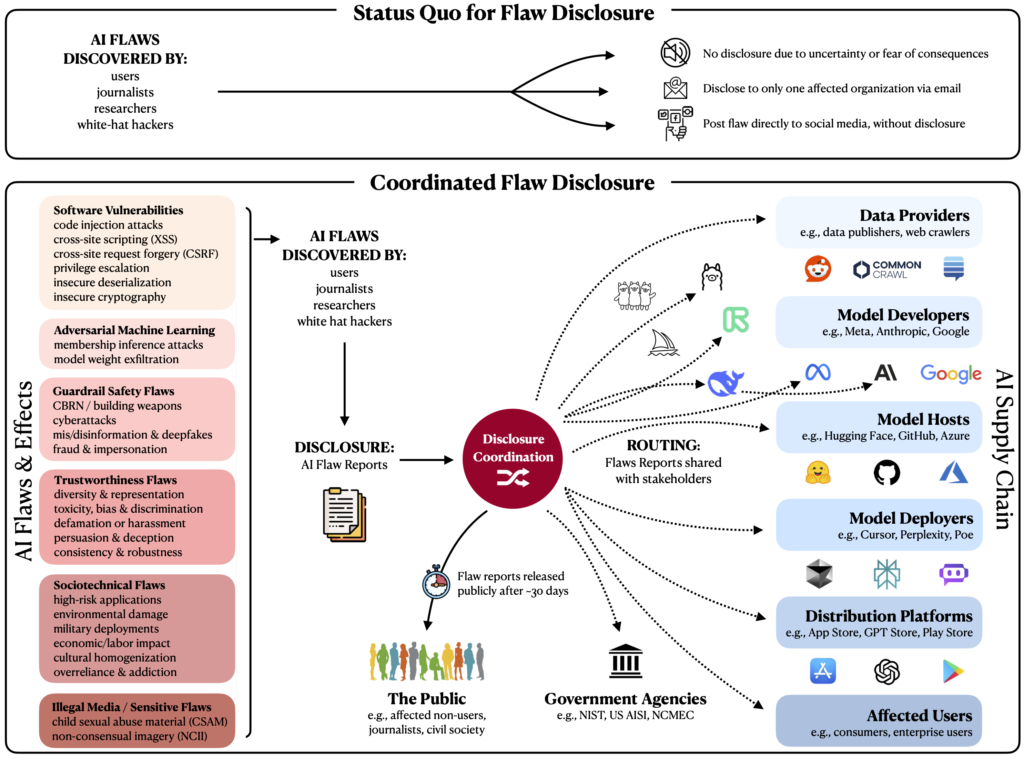

As artificial intelligence (AI) becomes deeply embedded in our daily lives, the safety, transparency, and reliability of these systems have become critical. Recent findings highlight significant vulnerabilities in widely used AI models, underscoring the urgent need for a robust way to identify and report these flaws responsibly. In response, researchers from leading institutions have proposed coordinated AI flaw reporting, an approach to disclosure inspired by cybersecurity best practices.

The problem: AI vulnerabilities and reporting challenges

Recent cases, such as the GPT-3.5 flaw that exposed personal data, illustrate the serious risks posed by unaddressed AI vulnerabilities. Researchers discovered that prompting the model to repeat a word many times eventually caused it to produce incoherent text that included sensitive personal information from its training data. Although the issue was swiftly resolved, the incident is a stark reminder of the “Wild West” environment around AI flaw disclosures, where significant vulnerabilities may remain hidden because of legal fears or unclear reporting pathways.

The absence of structured reporting infrastructure discourages researchers from responsibly sharing discovered vulnerabilities. Consequently, flaws remain unaddressed or are communicated only to select companies, leaving other stakeholders unaware of potentially transferable risks.

The solution: coordinated AI flaw reporting

A new initiative from researchers at institutions including MIT, Stanford, Carnegie Mellon, and Princeton advocates for a structured flaw-reporting ecosystem. Their proposal, detailed in the paper “In-House Evaluation Is Not Enough: Towards Robust Third-Party Flaw Disclosure for General-Purpose AI,” calls for three primary actions:

1. Standardized and coordinated AI flaw reporting

The initiative introduces standardized reports, analogous to software security disclosures, that streamline flaw documentation. Reports should clearly describe the flaw, reproduction steps, violated policies, and statistical metrics to assess the prevalence of the vulnerability.
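
To make the idea concrete, here is a minimal sketch of what such a report could look like as a data structure. It only mirrors the four elements named above (description, reproduction steps, violated policies, and a prevalence metric). The class name `FlawReport`, its field names, and the example values are assumptions made for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass, asdict
from typing import List
import json


@dataclass
class FlawReport:
    """Hypothetical flaw report; field names are illustrative only."""
    system: str                     # model or product where the flaw was observed
    description: str                # what goes wrong, in plain language
    reproduction_steps: List[str]   # ordered steps another evaluator can follow
    violated_policies: List[str]    # policies or documented behaviors the flaw breaks
    trials: int                     # number of attempts made
    failures: int                   # attempts in which the flaw appeared

    def prevalence(self) -> float:
        """Share of trials in which the flaw reproduced."""
        if self.trials <= 0:
            raise ValueError("trials must be positive")
        return self.failures / self.trials

    def to_json(self) -> str:
        """Serialize the report, including the derived prevalence figure."""
        payload = asdict(self)
        payload["prevalence"] = self.prevalence()
        return json.dumps(payload, indent=2)


# Example values are invented for illustration.
report = FlawReport(
    system="example-model-v1",
    description="Repeating one word many times leads the model to emit memorized text.",
    reproduction_steps=[
        "Ask the model to repeat a single word many times",
        "Inspect the output once it stops repeating",
    ],
    violated_policies=["privacy: no disclosure of personal data"],
    trials=200,
    failures=14,
)
print(report.to_json())
```

A shared shape like this is what lets a report be compared, deduplicated, and forwarded without retyping; a real schema would likely need more fields, such as severity and reporter contact details.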

2. Safe harbor and legal protections

AI developers must provide clear legal protections for third-party evaluators engaged in responsible flaw discovery. Current broad terms of service often hinder researchers by threatening legal consequences for “reverse engineering” or automated data collection. The proposal recommends explicit safe-harbor policies that shield researchers from legal retaliation when acting in good faith.

3. Centralized disclosure coordination center

The establishment of a centralized “Disclosure Coordination Center” is proposed to manage and route flaw reports across the AI ecosystem. Such a center would triage vulnerabilities, coordinate responses among stakeholders, and handle sensitive disclosures appropriately, preventing premature public exposure while ensuring timely remediation.
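
The routing role described above can be sketched in a few lines. This is a simplified illustration, not the design proposed in the paper: the `IncomingReport` type, the `REGISTRY` mapping, the `route` function, and the rule that only non-sensitive reports are published immediately are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class IncomingReport:
    """Hypothetical report as received by a coordination center."""
    report_id: str
    affected_systems: Set[str]   # systems the reporter believes are affected
    sensitive: bool              # True if public details could enable misuse


# Hypothetical registry mapping systems to the developers responsible for them.
REGISTRY: Dict[str, str] = {
    "model-a": "developer-1",
    "model-b": "developer-2",
    "model-c": "developer-2",
}


def route(report: IncomingReport, registry: Dict[str, str]) -> Dict[str, object]:
    """Decide who is notified and whether details can be published right away."""
    known = [s for s in report.affected_systems if s in registry]
    notify: List[str] = sorted({registry[s] for s in known})
    unrouted: List[str] = sorted(s for s in report.affected_systems if s not in registry)
    return {
        "report_id": report.report_id,
        "notify": notify,                      # every developer with an affected system
        "unrouted_systems": unrouted,          # needs manual follow-up by the center
        "publish_now": not report.sensitive,   # assumed rule: hold sensitive reports
    }


decision = route(
    IncomingReport("FR-001", {"model-a", "model-c", "model-x"}, sensitive=True),
    REGISTRY,
)
print(decision)
```

The point of the sketch is that one report reaches every developer whose system may share the flaw, rather than only the company the researcher happened to contact.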

Bridging AI governance with responsible innovation

Adopting coordinated flaw reporting aligns with Responsible AI principles such as transparency, fairness, accountability, and safety. It also complements regulatory frameworks such as the EU AI Act, supporting compliance by systematically addressing risks and promoting transparency throughout AI systems’ lifecycles.

AI governance frameworks that embrace coordinated flaw disclosure will build greater trust among consumers, developers, and regulators, driving broader societal adoption. This initiative supports AI for Good by ensuring that AI’s societal, economic, and environmental benefits are not overshadowed by preventable harms.

Coordinated AI flaw reporting: join the Responsible AI revolution

As businesses and organizations integrate AI, proactive engagement with responsible disclosure practices is not merely a compliance exercise; it is strategic foresight. Companies are encouraged to:

- Implement standardized flaw reporting procedures.

- Establish clear, protective legal frameworks for external researchers.

- Collaborate with the broader AI community through dedicated flaw reporting platforms.

By doing so, organizations will enhance their AI strategies, fortify governance, and remain resilient against emerging risks.

As AI governance and strategy specialists, our commitment is to guide businesses through this evolving landscape, ensuring that their AI journeys are both innovative and responsible. Together, we can build AI systems that are transparent, trustworthy, and aligned with society’s values and regulatory expectations.

Let’s start the conversation on coordinated flaw reporting today. Responsible AI isn’t just an ideal; it’s essential for our future.